LUSITANIA II Supercomputer

Since the end of 2015, Extremadura has had the LUSITANIA II Supercomputer, which significantly increases the computational resources offered by COMPUTAEX. It reaches a computing power of 90.8 Teraflops, a considerable increase over the first LUSITANIA Supercomputer.

In November 2018, LUSITANIA II was recognized by the Ministry of Science, Innovation and Universities as a Singular Scientific and Technical Infrastructure (ICTS).

Please visit the following link to request supercomputing resources and services.

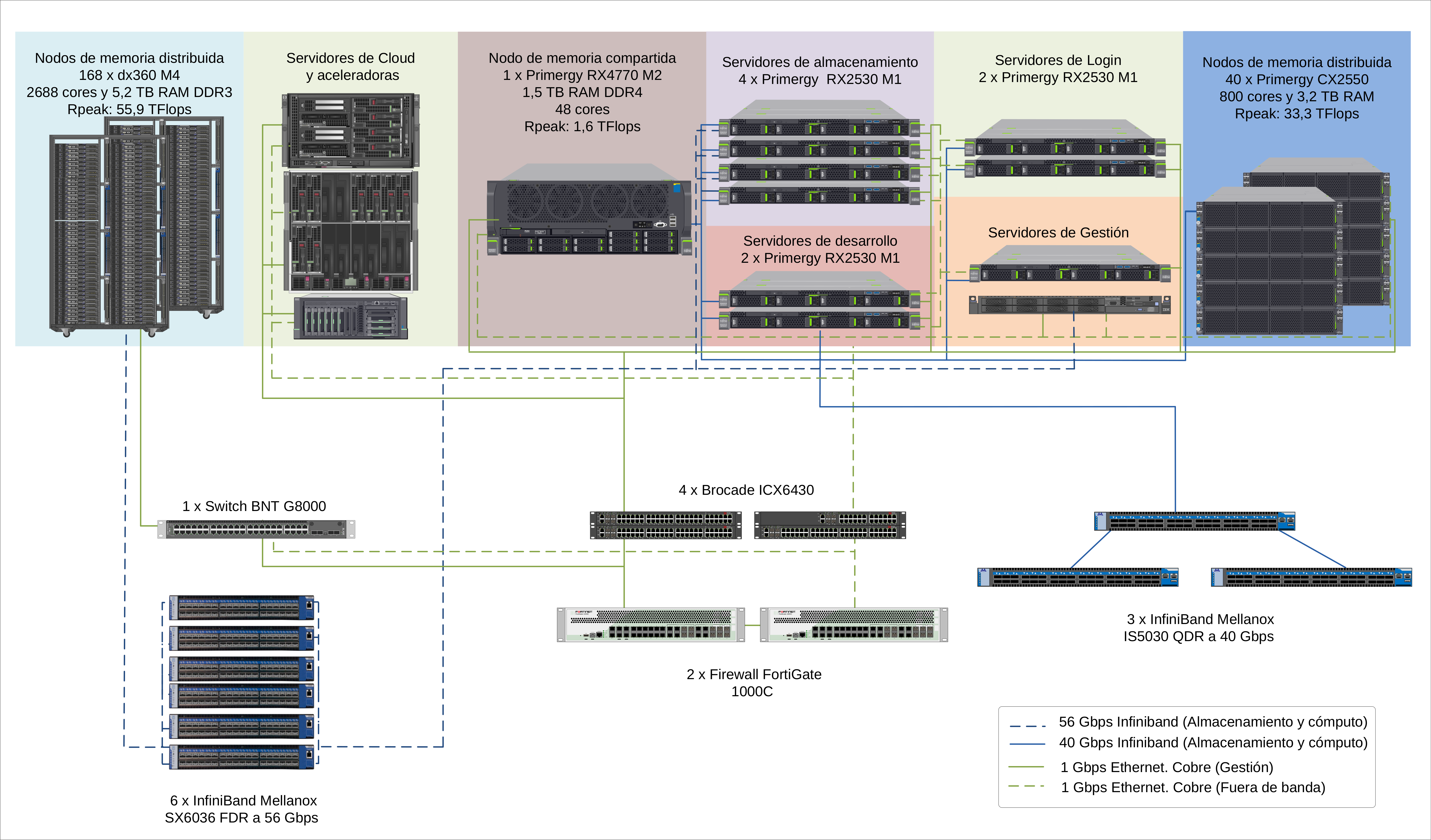

The technical specifications of the LUSITANIA II Supercomputer are described below:

Shared memory node

- 1 Fujitsu Primergy RX4770 M2 with 4 Intel Xeon E7-4830v3 processors (12 cores per processor, 2.1GHz, 30 MB cache; 48 cores in total), 1.5 TB DDR4 RAM, 4 power supplies and 300GB SAS disks.

Distributed memory cluster

- 10 Fujitsu Primergy CX400 chassis, each holding up to four server nodes.

- 40 Fujitsu Primergy CX2550 servers, each with 2 Intel Xeon E5-2660v3 processors (10 cores per processor, 2.6GHz, 25 MB cache; 20 cores per node, 800 cores in total), 80 GB RAM and 2 x 128GB SSD disks.

- 168 IBM System x iDataPlex dx360 M4 servers, each with 2 Intel Xeon E5-2670 Sandy Bridge-EP processors (8 cores per processor, 2.6GHz, 20 MB cache; 16 cores per node, 2688 cores in total) and 32GB RAM.

- 2 IBM iDPx racks with RDHX (rear-door heat exchanger water cooling), each holding up to 84 servers.
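The 90.8 Teraflops figure quoted at the top of this page can be reproduced, under stated assumptions, from the processor counts listed above. The minimal sketch below multiplies cores × clock × double-precision FLOPs per cycle, assuming 16 FLOPs/cycle for the Haswell-generation Xeon v3 parts (AVX2 with two FMA units) and 8 FLOPs/cycle for the Sandy Bridge E5-2670 (AVX). It counts only the shared memory node and the distributed memory cluster. The source does not say how COMPUTAEX derived the figure, and the `Partition` class and its field names are hypothetical.

```python
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    name: str
    nodes: int
    cores_per_node: int
    clock_ghz: float
    # Assumption: per-core double-precision FLOPs per cycle for the microarchitecture
    dp_flops_per_cycle: int

    @property
    def cores(self) -> int:
        return self.nodes * self.cores_per_node

    @property
    def peak_tflops(self) -> float:
        # cores * GHz * FLOPs/cycle = GFLOPS; divide by 1000 for TFLOPS
        return self.cores * self.clock_ghz * self.dp_flops_per_cycle / 1000


PARTITIONS = [
    # Haswell (AVX2, two FMA units): assumed 16 DP FLOPs/cycle per core
    Partition("Shared memory node (E7-4830v3)", 1, 48, 2.1, 16),
    Partition("CX2550 nodes (E5-2660v3)", 40, 20, 2.6, 16),
    # Sandy Bridge (AVX, separate add and multiply ports): assumed 8 DP FLOPs/cycle
    Partition("dx360 M4 nodes (E5-2670)", 168, 16, 2.6, 8),
]


def total_peak_tflops(partitions: Iterable[Partition]) -> float:
    """Sum the theoretical peak of every partition."""
    return sum(p.peak_tflops for p in partitions)


if __name__ == "__main__":
    for p in PARTITIONS:
        print(f"{p.name}: {p.cores} cores, {p.peak_tflops:.2f} TFLOPS")
    print(f"Total: {total_peak_tflops(PARTITIONS):.1f} TFLOPS")
```

Under these assumptions the three partitions contribute roughly 1.61, 33.28 and 55.91 TFLOPS, which add up to about 90.8 TFLOPS. This is a theoretical peak; sustained performance on real workloads will be lower.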

Cloud computing nodes

- 2 x HP ProLiant DL-380-G7 servers, each with 2 quad-core Intel Xeon E5630 processors (2.53GHz/4-core/12MB) and 2 x 146 GB SAS disks, with 32 GB and 64 GB of memory respectively.

- 1 x HP ProLiant DL-380-G5 with 2 quad-core Intel Xeon E5450 processors (3GHz/4-core/12MB), 16 GB RAM and 2 x 146 GB SAS disks.

- 2 x HP ProLiant BL465c Gen8 Server Blades, each with 2 x AMD Opteron 6366 HE processors (1.8GHz/8-core/16MB), 128 GB RAM and 2 x 300GB SAS disks. These servers belong to the FI4VDI project, from the Interreg IV B SUDOE program, financed by the ERDF.

- 2 x HP ProLiant BL465c Gen8 Server Blades with AMD Opteron 6276 processors (2.3GHz/16-core/16MB), 256 GB RAM and 4 x 300GB SAS disks per server. These servers and the Intel Cluster Studio for Linux software belong to the SIATDECO (RITECA II) project, co-financed by the European Regional Development Fund (ERDF) through POCTEP 2007-2013.

- 4 x HP ProLiant BL460c Gen6 Server Blades with 2 Intel Xeon E5540 processors (2.53GHz/4-core/8MB), 24 GB RAM and 2 x 146GB SAS disks per server. These servers belong to the FI4VDI project, from the Interreg IV B SUDOE program, financed by the ERDF.

- 4 x HP ProLiant BL465c Gen8 Server Blades with AMD Opteron 6376 processors (2.3GHz/16-core/16MB), 128 GB RAM and 8 x 300GB SAS disks per server.

Computing accelerating units

- 1 x Fujitsu PRIMERGY RX350 S8 server with 2 Intel Xeon E5-2620v2 processors (2.10GHz/6-core/15MB), 256 GB RAM and 2 x 300GB SAS disks. This server belongs to the MITTIC project, co-financed by the ERDF through POCTEP 2007-2013.

- 2 x HP ProLiant WS460c G6 Workstation Blades with HP BL460c G7 Intel Xeon E5645 processors (2.40GHz/6-core/12MB), 96 GB RAM and 4 x 300GB SAS disks per server, and 2 x NVIDIA Tesla M2070Q (448 CUDA cores and 6GB GDDR5). These servers and the Intel Cluster Studio for Linux software belong to the SIATDECO (RITECA II) project, co-financed by the ERDF through POCTEP 2007-2013.

- 2 NVIDIA Tesla M2070Q compute cards with PCI Express 2.0 connection, 448 CUDA cores at 1150 MHz, 6GB GDDR5 SDRAM video memory and an operational consumption of 225 W. This hardware belongs to the SIATDECO (RITECA II) project, co-financed by the ERDF through POCTEP 2007-2013.

- 1 Asus ROG Strix NVIDIA GeForce GTX 1080 Advanced graphics card with PCI Express 3.0 connection, 2560 CUDA cores at 1695 MHz, 8GB GDDR5X video memory and OpenGL® 4.5 support, with an operational consumption of 600 W. This hardware belongs to the CultivData project, co-financed by the ERDF.

- 1 x Intel Xeon Phi 3120P coprocessor. This hardware belongs to the MITTIC project, co-financed by the ERDF through POCTEP 2007-2013.
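For the GPUs, a back-of-the-envelope estimate of theoretical single-precision peak is CUDA cores × clock × 2, assuming each CUDA core completes one fused multiply-add (2 FLOPs) per cycle. The sketch below uses the core counts and clocks quoted above. The source does not say whether 1695 MHz is the GTX 1080's base or boost clock, and the function and dictionary names are illustrative only.

```python
def peak_sp_gflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical single-precision peak in GFLOPS.

    Assumes one FMA (2 FLOPs) per CUDA core per cycle at the given clock.
    """
    # cores * MHz * FLOPs/cycle = MFLOPS; divide by 1000 for GFLOPS
    return cuda_cores * clock_mhz * 2 / 1000


# (CUDA cores, clock in MHz) as listed in the accelerator section
GPUS = {
    "NVIDIA Tesla M2070Q": (448, 1150),
    "Asus ROG Strix GeForce GTX 1080 Advanced": (2560, 1695),
}

if __name__ == "__main__":
    for name, (cores, mhz) in GPUS.items():
        print(f"{name}: ~{peak_sp_gflops(cores, mhz):.0f} GFLOPS single precision")
```

This gives roughly 1.03 TFLOPS per Tesla M2070Q and 8.68 TFLOPS for the GTX 1080. Double-precision throughput is lower on both cards, and much lower on the GeForce card.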

Service nodes

- 3 Fujitsu Primergy RX2530 M1 servers, each with 2 Intel Xeon E5-2620v3 processors (6 cores, 2.4GHz, 15 MB cache), 32 GB DDR4 RAM and 2 x 300 GB SAS disks.

- 1 IBM System x x3550 M4 server with 1 Intel Sandy Bridge-EP processor (8 cores, 2.6GHz, 20MB cache), 16GB RAM and 2 x 300GB SAS disks.

Development nodes

- 2 Fujitsu Primergy RX2530 M1 servers, each with 2 Intel Xeon E5-2620v3 processors (6 cores, 2.4GHz, 15 MB cache), 64GB DDR4 RAM and 2 x 300GB SAS disks.

Storage

- Metadata cabinet (MDT): Eternus DX 200 S3 with 15 x 900GB SAS disks = 12 TB.

- 2 Fujitsu Primergy RX2530 M1 servers for Lustre metadata management, each with 2 Intel Xeon E5-2620v3 processors (6 cores, 2.4GHz, 15 MB cache), 64GB DDR4 RAM and 2 x 300GB SAS disks.

- Data cabinet (OST): Eternus DX 200 with 41 x 2TB NL-SAS disks and 31 x 4TB NL-SAS disks = 206 TB.

- 2 Fujitsu Primergy RX2530 M1 servers for Lustre object storage management, each with 2 Intel Xeon E5-2620v3 processors (6 cores, 2.4GHz, 15 MB cache), 64GB DDR4 RAM and 2 x 300GB SAS disks.
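The storage capacities listed above can be checked from the disk counts. The sketch below sums disk count × disk size in decimal units and ignores RAID, hot spares and file-system overhead. The OST disks reproduce the stated 206 TB exactly. The MDT disks add up to 13.5 TB raw against the stated 12 TB; the source does not say whether that gap reflects RAID overhead or binary units (13.5 TB is about 12.3 TiB), so treat either reading as an assumption.

```python
def raw_capacity_gb(groups: list[tuple[int, int]]) -> int:
    """Raw capacity in decimal GB from (disk_count, disk_size_gb) pairs.

    Ignores RAID layout, hot spares and file-system overhead.
    """
    return sum(count * size_gb for count, size_gb in groups)


MDT_DISKS = [(15, 900)]               # Eternus DX 200 S3: 15 x 900 GB SAS
OST_DISKS = [(41, 2000), (31, 4000)]  # Eternus DX 200: 41 x 2 TB + 31 x 4 TB NL-SAS

if __name__ == "__main__":
    for name, disks in (("MDT", MDT_DISKS), ("OST", OST_DISKS)):
        gb = raw_capacity_gb(disks)
        tib = gb * 10**9 / 2**40  # decimal bytes converted to binary TiB
        print(f"{name}: {gb / 1000:.1f} TB raw ({tib:.1f} TiB)")
```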

Network topology

The supercomputer's external connectivity is provided by a link of up to 10 Gbps to the Extremadura Scientific-Technological Network, which connects the main cities and technology centers of the region. CénitS is also interconnected with RedIRIS and the GÉANT European network.
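Since the external link is rated at up to 10 Gbps, a quick estimate of the best-case time to move a dataset in or out of the system is its size in bits divided by the link rate. The sketch below is a hypothetical helper; its `efficiency` parameter is an assumption standing in for protocol overhead and shared use of the link, which the source does not quantify.

```python
def transfer_time_s(data_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move data_bytes over a link_gbps link.

    efficiency is a hypothetical fraction (0, 1] of the line rate actually achieved.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    one_tb = 1e12  # decimal terabyte
    best = transfer_time_s(one_tb, 10)
    print(f"1 TB at 10 Gbps: {best:.0f} s (~{best / 60:.1f} min) at full line rate")
```

At full line rate 1 TB takes about 800 s (roughly 13 minutes); halving the assumed efficiency doubles that time.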

Internally, the service and computing infrastructure is built on:

- 2 Fortinet Fortigate 1000C firewalls for perimeter security, providing firewall capability, VPN, antivirus, intrusion detection and bandwidth management, configured as a redundant, high-performance active-passive cluster.

- 14 Mellanox SX6036 InfiniBand switches with 36 FDR 56Gbps ports each for the computing network.

- 4 BNT G8052F switches with 48 ports and 1 BNT G8000 switch with 48 ports.

- 3 Brocade ICX6430 switches with 48 ports and a Brocade ICX6430 switch with 24 ports for the management and communication network.

- 3 Mellanox IS5030 InfiniBand switches with 36 QDR 40Gbps ports each for the computing network.
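The 56 Gbps (FDR) and 40 Gbps (QDR) figures for the InfiniBand switches are signalling rates of 4x links. Usable data rates are lower because of line encoding: QDR signals at 10 Gb/s per lane with 8b/10b encoding, while FDR signals at 14.0625 Gb/s per lane with 64b/66b encoding. The sketch below applies those encodings and tallies switch ports. It ignores overhead above the physical layer, its port totals include any ports used for inter-switch links, and the names are illustrative.

```python
# Assumed physical-layer parameters for 4x InfiniBand links:
# (per-lane signalling rate in Gb/s, line-encoding efficiency)
LINK_PARAMS = {
    "QDR": (10.0, 8 / 10),      # 8b/10b encoding
    "FDR": (14.0625, 64 / 66),  # 64b/66b encoding
}

PORTS_PER_SWITCH = 36


def signalling_rate_gbps(kind: str, lanes: int = 4) -> float:
    """Raw signalling rate of a link, before line encoding."""
    lane_rate, _ = LINK_PARAMS[kind]
    return lanes * lane_rate


def data_rate_gbps(kind: str, lanes: int = 4) -> float:
    """Rate left after line-encoding overhead; higher protocol layers are ignored."""
    _, efficiency = LINK_PARAMS[kind]
    return signalling_rate_gbps(kind, lanes) * efficiency


if __name__ == "__main__":
    # Switch counts taken from the network list: 14 FDR and 3 QDR switches
    for kind, switches in (("FDR", 14), ("QDR", 3)):
        print(
            f"{kind}: {switches * PORTS_PER_SWITCH} ports, "
            f"{signalling_rate_gbps(kind):.2f} Gb/s signalling, "
            f"~{data_rate_gbps(kind):.1f} Gb/s data per port"
        )
```

This yields about 54.5 Gb/s of data per FDR port and 32 Gb/s per QDR port, so the FDR fabric's practical advantage over QDR is larger than the nominal 56/40 ratio suggests.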

This project (FCYA10-1E-157) is co-financed by the Ministry of Economy and Competitiveness through the subprogram of Scientific-Technological Infrastructure Projects (2010-2011) of the Spanish National Plan for Scientific and Technical Research and Innovation 2008-2011.